I am a research scientist at Google DeepMind working on robotic manipulation. I earned my PhD at MIT, working with Prof. Alberto Rodriguez. I develop algorithms and solutions that enable robots to solve new tasks with high accuracy and dexterity. My research was supported by the LaCaixa and Facebook fellowships.

{first_name}0bauza@gmail.com / CV / Bio / Google Scholar / LinkedIn

My research focuses on developing algorithms for precise robotic generalization: making robots capable of solving many tasks without compromising their performance and reliability. By learning general AI models of perception and control, we can give robots the right tools to thrive across diverse environments and task requirements. In my work, I have studied how learned AI models enable precise control, and how accurate visuo-tactile perception lets robots solve complex tasks such as grasping, localization, and precise placement without prior experience. My goal is to continue developing algorithms that make robots dexterous and versatile at manipulating their environment.

- December 2025 Invited talk at Deep Learning Barcelona Symposium (video).
- October 2025 Invited talk at LaCaixa Becarios Knowledge Day.
- October 2025 Invited talk at IROS Workshop Learning from Teleoperation.
- October 2025 Invited talk at IROS Workshop Tactile Sensing Toward Robot Dexterity and Intelligence.
- June 2025 Invited talk at A Robot Touch of AI: London Summer School in Robotics & AI 2025.
- May 2025 Tech Talk at ICRA Stage Presentations.
- May 2025 Invited talk at ICRA Workshop Beyond Pick and Place.
- May 2025 Invited talk at Microsoft Cortex AI Research Talk Series.
- March 2025 Invited guest lecture at Oxford Saïd Business School MBA.
- November 2024 Invited talk at CoRL Workshop on Learning Robotic Assembly.
- October 2024 Invited talk at DevFest Menorca.
- August 2024 Invited talk at Forum Illa del Rei on AI.
- June 2024 Invited talk at Oxford Business School Analytics and AI class.
- May 2024 Invited talk at ICRA Workshop ViTac.
- April 2024 Invited talk at ETH lab seminar.
- December 2023 Invited talk at Deep Learning Barcelona Symposium.
- December 2023 Invited talk and panel at Balearic Ecosystem for AI.
- July 2023 Guest lecturer at Oxford’s MBA class on Machine Learning for Business.
- January 2023 Guest interview on the UK Robotics and Autonomous Systems Network’s podcast, Robot Talk.
- September 2022 Invited talk at the RSS 2022 workshop on The Science of Bumping Into Things.
- August 2022 Thesis defense: Visuo-tactile perception for dexterous robotic manipulation (video).

Z. Si, J. Chen, E. Karagozler, A. Bronars, J. Hutchinson, T. Lampe, N. Gileadi, T. Howell, S. Saliceti, L. Barczyk, I. Correa, T. Erez, M. Shridhar, M. Martins, K. Bousmalis, N. Heess, F. Nori, M. Bauza submitted to ICRA 2026 PDF / website We present ExoStart, a general and scalable learning framework that leverages human dexterity for robotic hand control. In particular, we obtain high-quality data by collecting direct demonstrations with a low-cost exoskeleton, capturing the rich behaviors that humans naturally demonstrate.

Gemini Team Technical Report PDF / website Gemini 2.5 Pro is Google DeepMind's most capable model yet, achieving state-of-the-art performance on frontier coding and reasoning benchmarks. Gemini 2.5 Pro is also a thinking model that excels at multimodal understanding, and it can now process up to 3 hours of video content.

Gemini Robotics Team Technical Report PDF / website Gemini Robotics is an advanced Vision-Language-Action (VLA) generalist model capable of directly controlling robots. Gemini Robotics executes smooth and reactive movements to tackle a wide range of complex manipulation tasks, handling unseen environments and following diverse, open-vocabulary instructions.

|

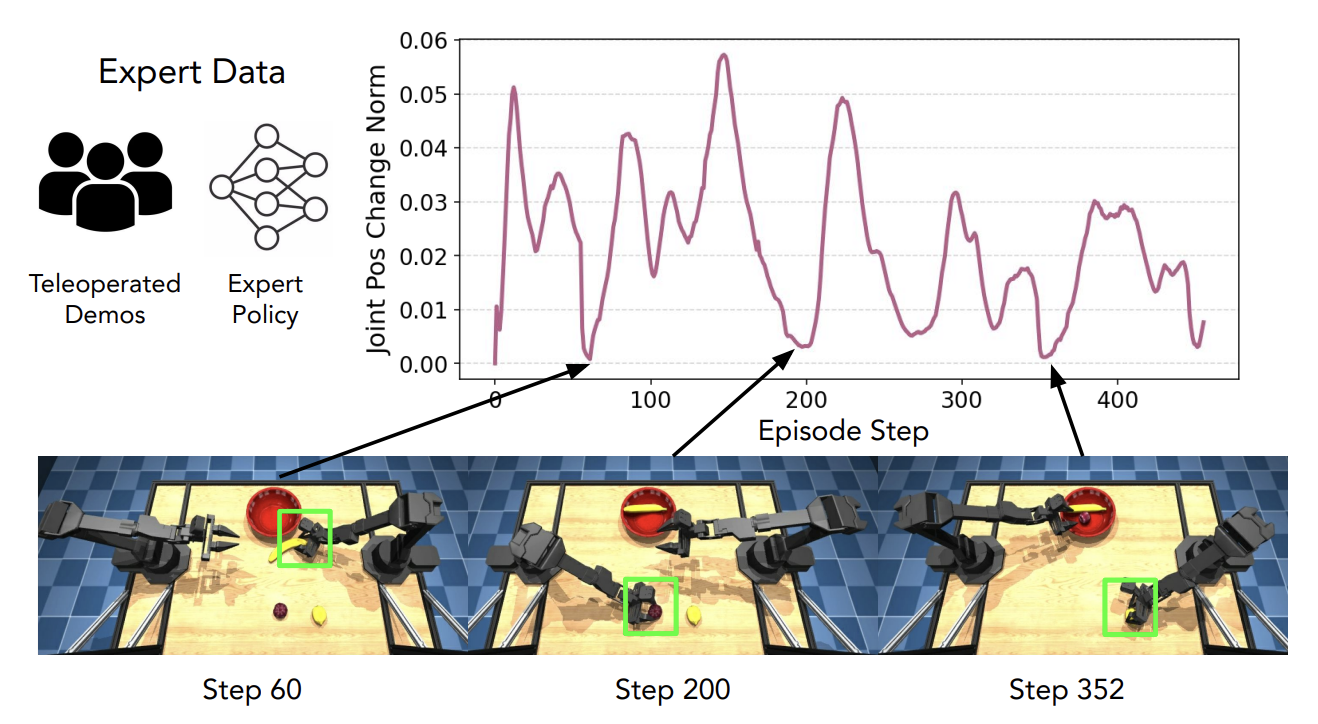

A. Chen, P. Brakel, A. Bronars, A. Xie, S. Huang, O. Groth, M. Bauza, et al. IROS , 2025 We investigate how to leverage the detectability of idling behavior to inform exploration and policy improvement. Our approach, Pause-Induced Perturbations (PIP), applies perturbations at detected idling states, thus helping it to escape problematic basins of attraction. |

|

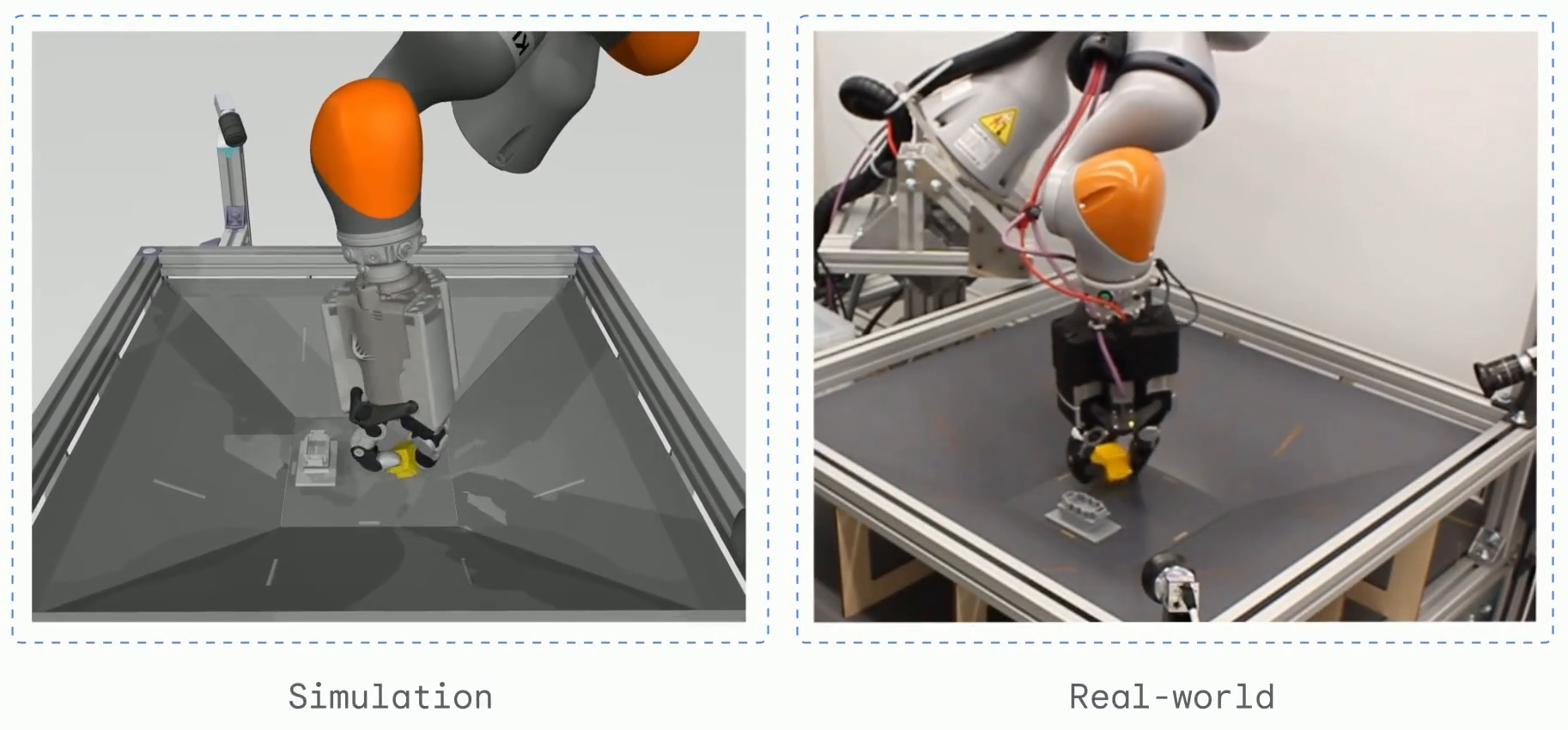

M. Bauza, J. Chen, V. Dalibard, N. Gileadi, et al. ICRA 2025 PDF / website DemoStart is an auto-curriculum reinforcement learning method capable of learning complex manipulation behaviors on an arm equipped with a three-fingered robotic hand, from only a sparse reward and a handful of demonstrations in simulation. |

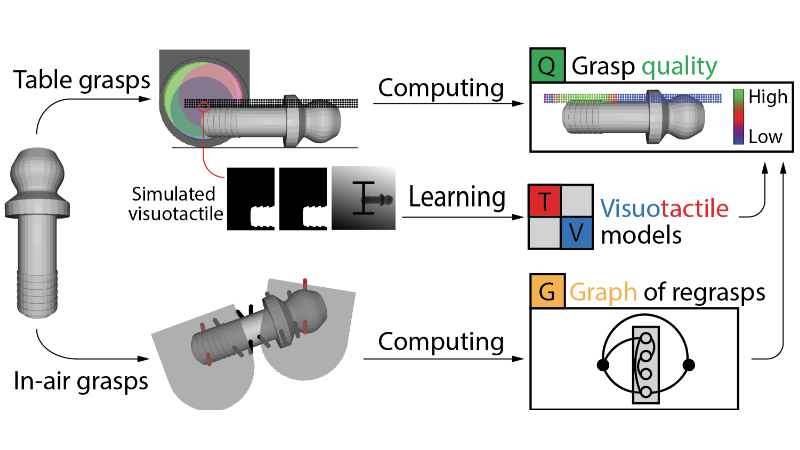

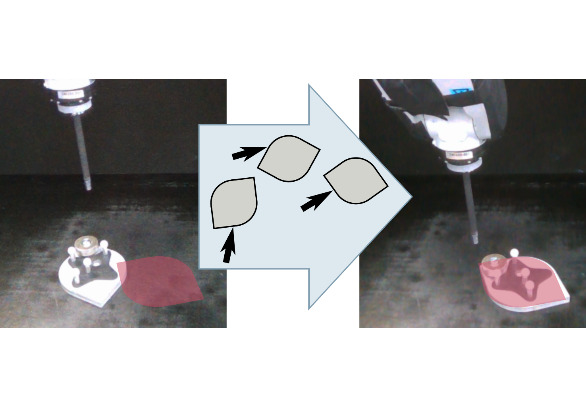

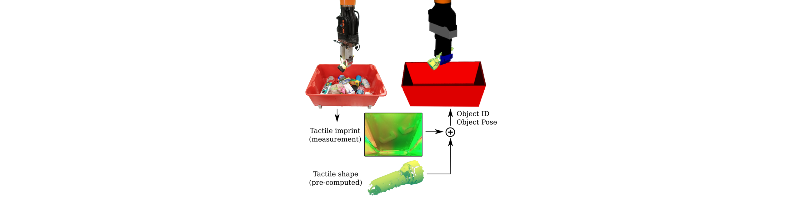

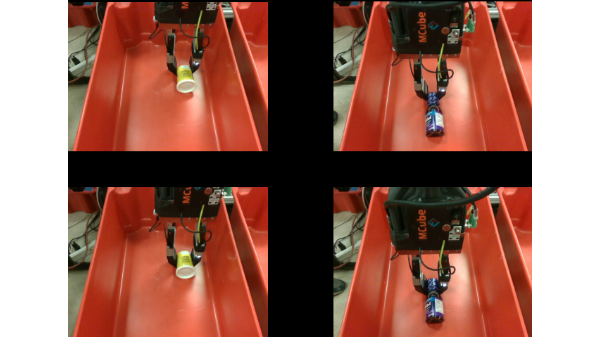

M. Bauza, T. Bronars, Y. Hou, I. Taylor, N. Chavan-Dafle, A. Rodriguez Science Robotics, 2024 PDF / website We learn in simulation how to accurately pick and place objects using visuo-tactile perception. Our solution transfers to the real world and successfully handles objects of different shapes without requiring prior experience.

J. Liang, F. Xia, W. Yu, A. Zeng, M. Gonzalez Arenas, M. Attarian, M. Bauza, et al. RSS, 2024 PDF / website Language Model Predictive Control (LMPC) is a framework that fine-tunes PaLM 2 to improve its teachability on 78 tasks across 5 robot embodiments. LMPC speeds up robot adaptation via in-context learning.

K. Bousmalis, G. Vezzani, D. Rao, C. Devin, A. Lee, M. Bauza, et al. TMLR, 2023 PDF / website We introduce a self-improving AI agent for robotics, RoboCat, that learns to perform a variety of tasks across different arms, and then self-generates new training data to improve its technique.

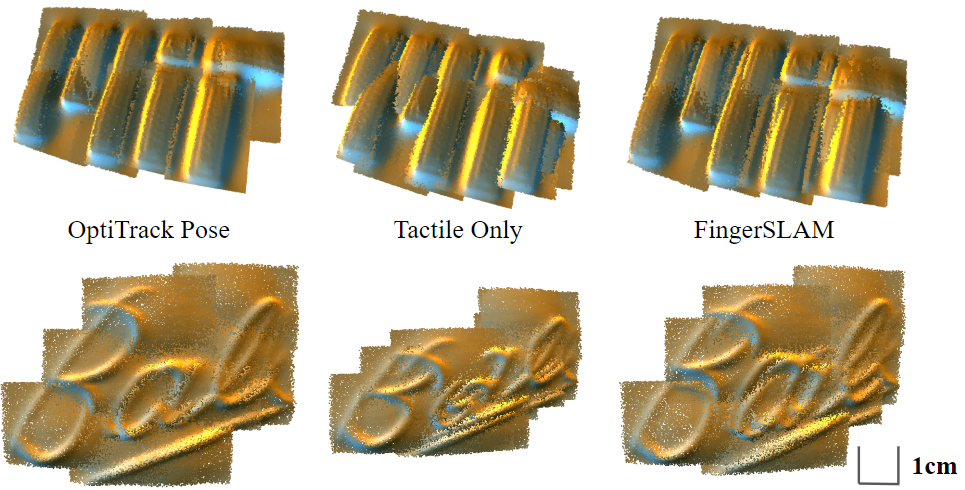

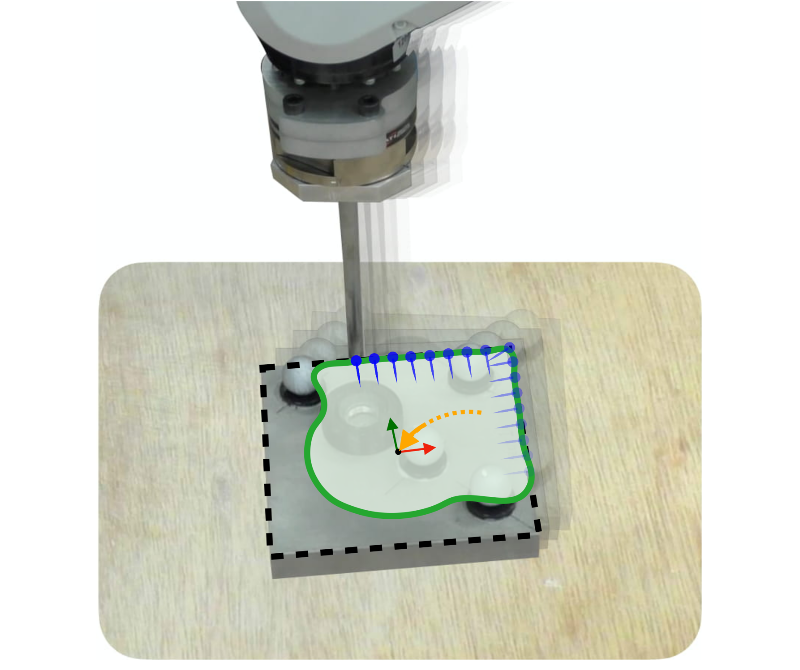

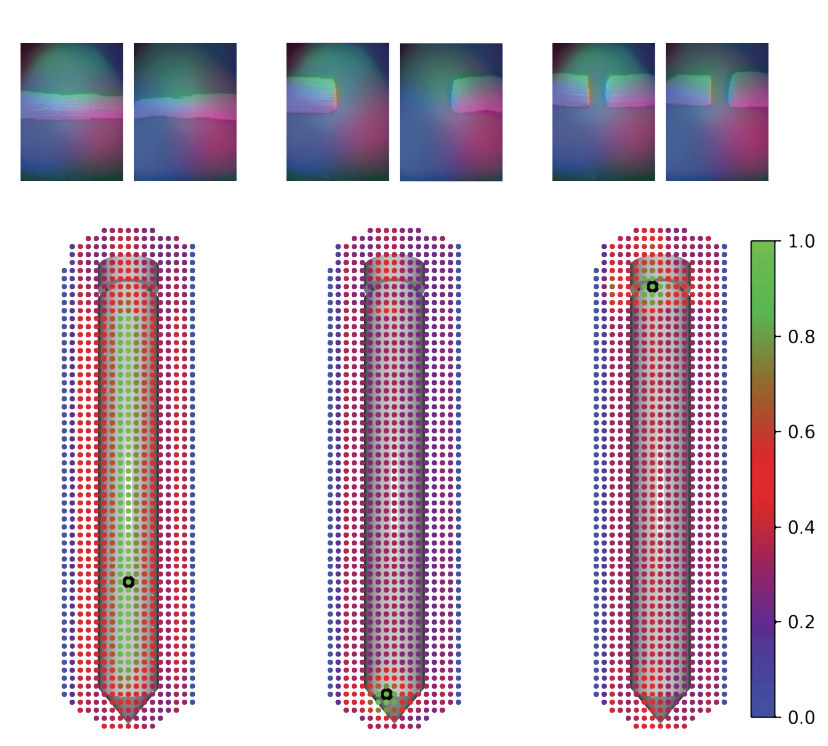

J. Zhao, M. Bauza, E. Adelson under review, 2022 We address the problem of using visuo-tactile feedback for 6-DoF localization and 3D reconstruction of unknown in-hand objects.

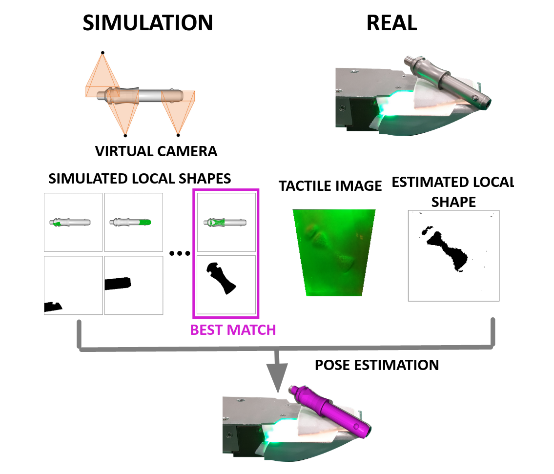

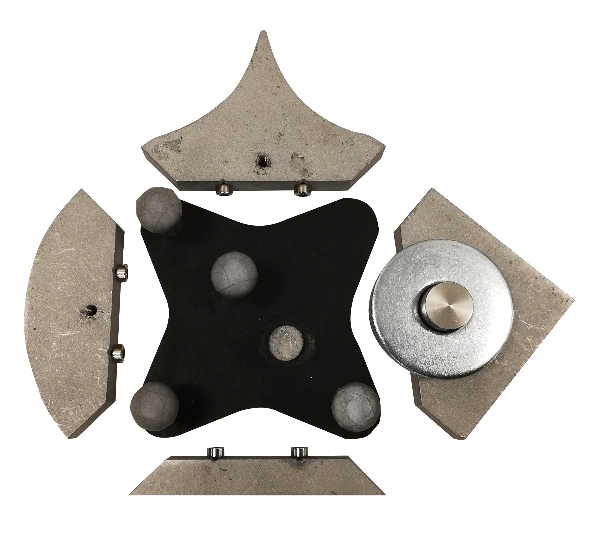

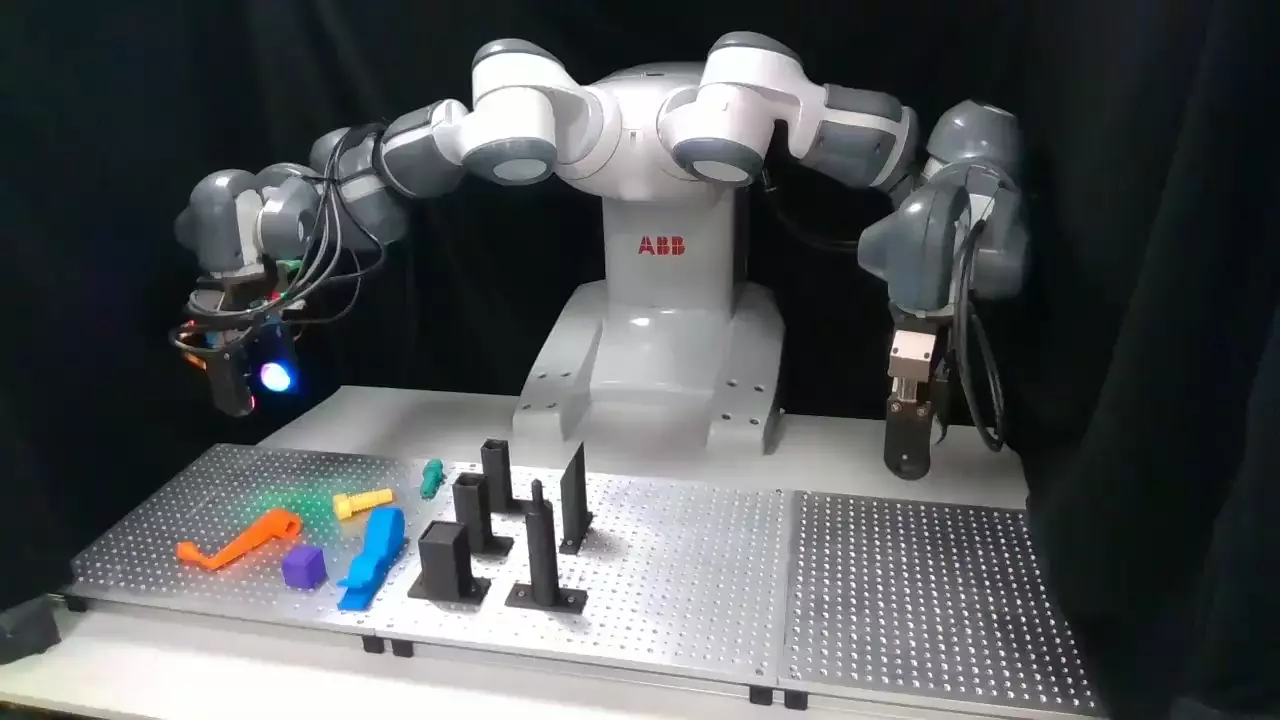

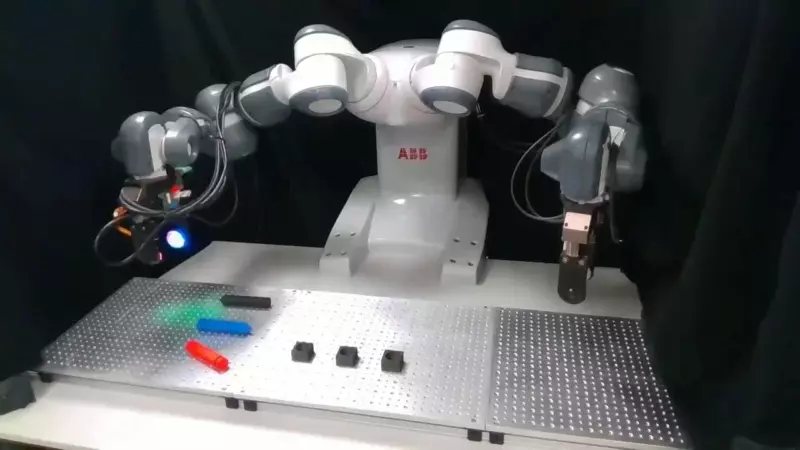

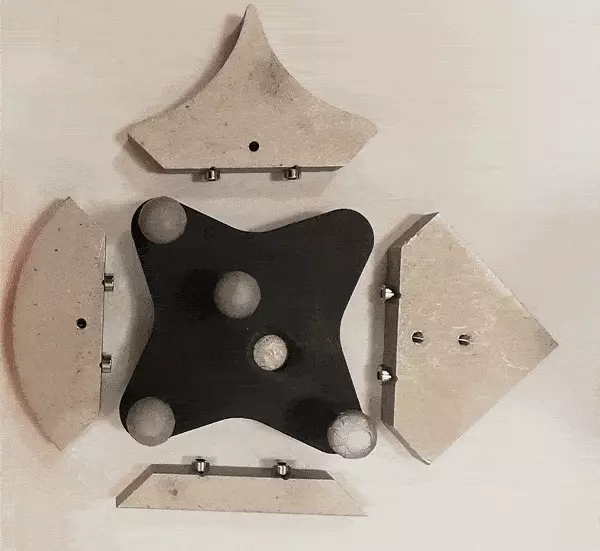

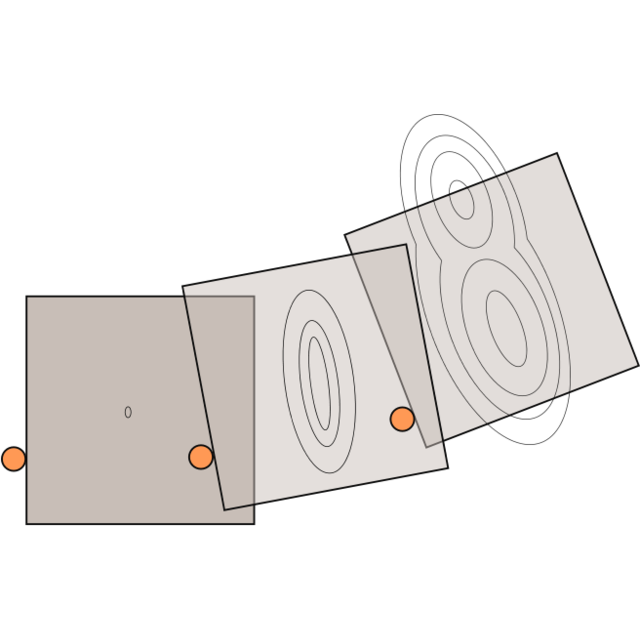

M. Bauza, T. Bronars, A. Rodriguez under review, 2022 We learn in simulation how to accurately localize objects using tactile sensing. Our solution transfers to the real world, providing reliable pose distributions from the first touch. Our technology is used by Magna, ABB, and MERL. We use the GelSlim tactile sensor.

|

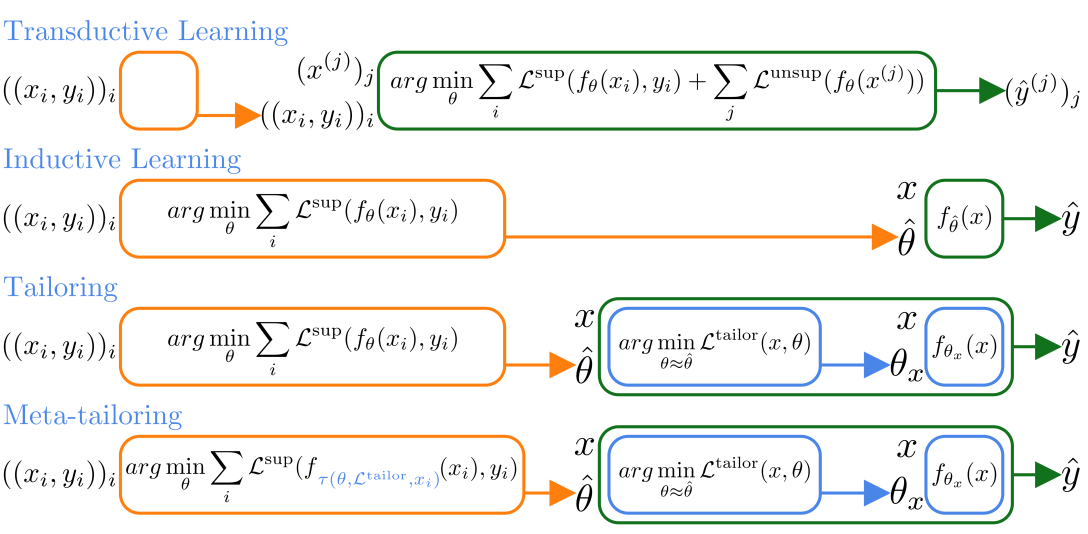

F. Alet, K. Kawaguchi, M. Bauza, N. Kuru, T. Lozano-Perez, L. Kaelbling NeurIPS, 2021 We optimize unsupervised losses for the current input. By optimizing where we act, we bypass generalization gaps and can impose a wide variety of inductive biases. |

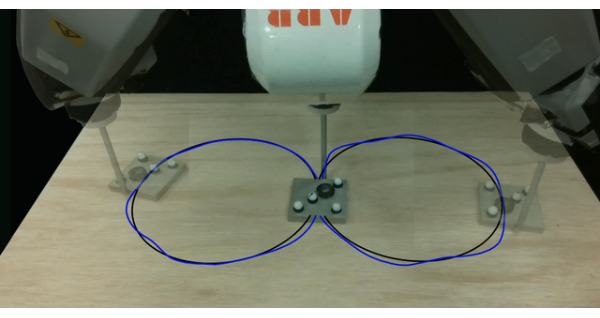

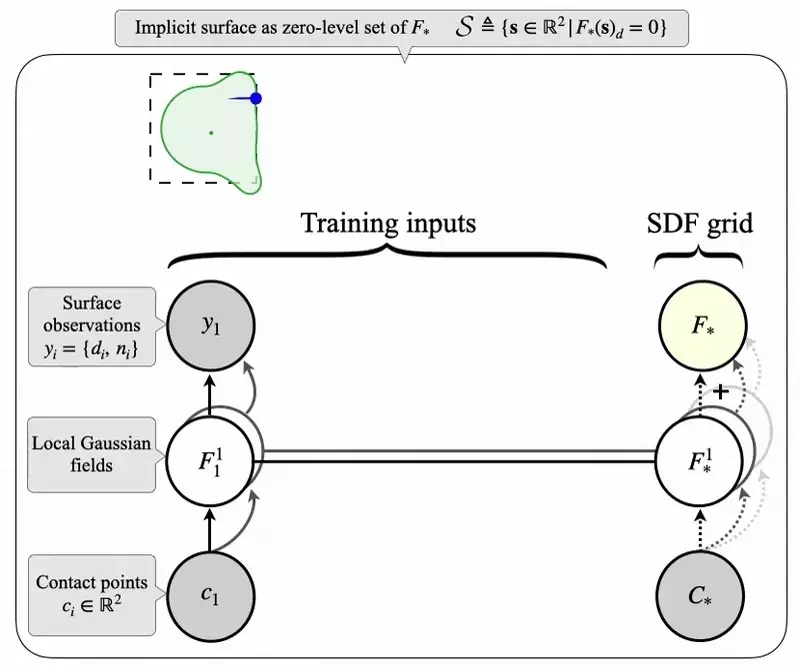

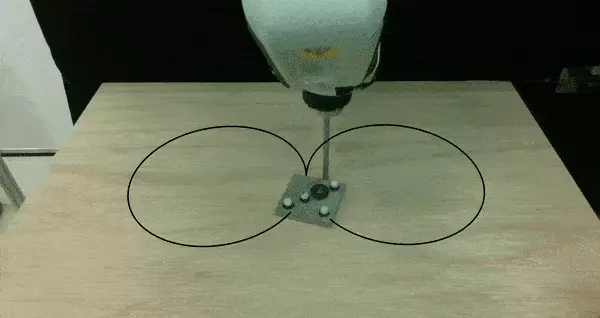

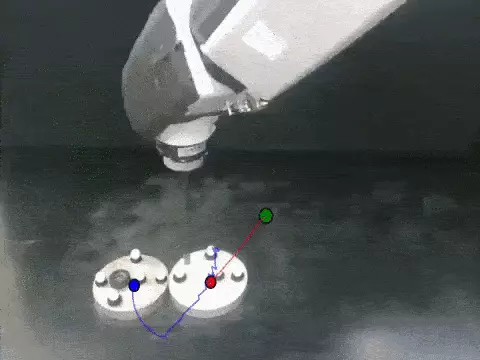

S. Suresh, M. Bauza, A. Rodriguez, J. Mangelson, M. Kaess ICRA, 2021 (Finalist for the ICRA 2021 Best Paper Award in Service Robotics) PDF / video / code / website We infer both the shape and pose of an object in real time from planar pushes.

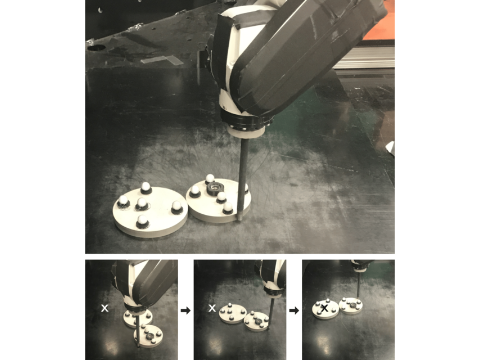

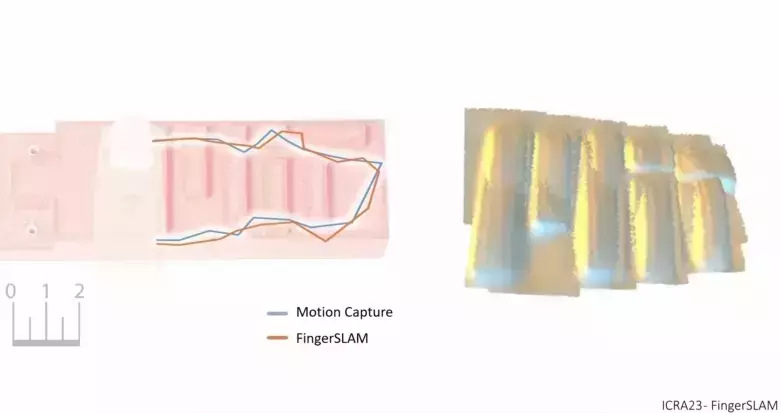

M. Bauza, E. Valls, B. Lim, T. Sechopoulos CoRL, 2020 PDF / video / website

A. Kloss, M. Bauza, J. Wu, J. Tenenbaum, A. Rodriguez, J. Bohg ICRA, 2020 PDF / video

|

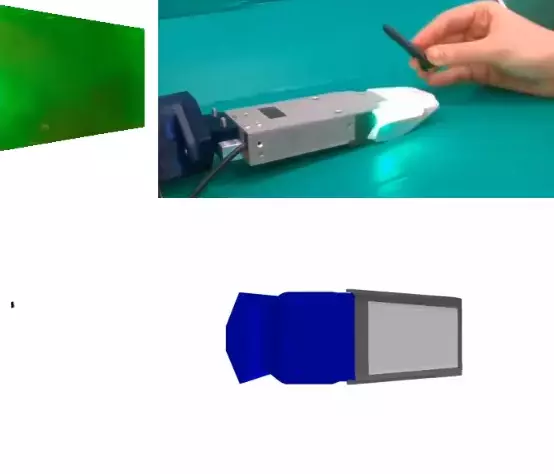

M. Bauza, O. Canal, A. Rodriguez ICRA, 2019 PDF / video / website Shape reconstruction and object localization using the vision-based tactile sensor GelSlim. |

|

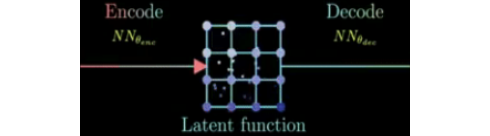

Y. Lin, M. Bauza, P. Isola CORL, 2019 PDF / code / website Learning to encode new objects to generate physically plausible video predictions. |

M. Bauza*, F. Hogan*, O. Canal, A. Rodriguez IROS, 2018 (Best Poster Award at an ICRA 2018 workshop) PDF / video Regrasping using a high-resolution tactile sensor to improve grasp stability.

|

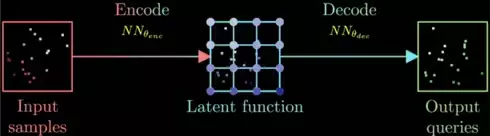

F. Alet, A. Jeewajee, M. Bauza, A. Rodriguez, T. Lozano-Perez, L. Kaelbling ICML, 2019 (Oral Presentation) PDF / video / website We learn to map functions to functions by combining graph networks and attention to build computational meshes and show this new framework can solve very diverse problems. |

|

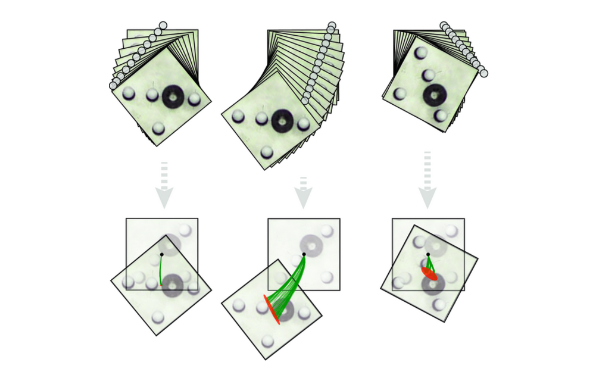

M. Bauza, F. Alet, Y. Lin, T. Lozano-Perez, L. Kaelbling, P. Isola, A. Rodriguez IROS, 2019 PDF / website We present a large high-quality dataset on planar pushing that includes RGB-D video and extense object variability. |

M. Bauza*, F. Hogan*, A. Rodriguez CoRL, 2018 PDF / video We explore the data complexity required for controlling, rather than modeling, planar pushing.

A. Ajay, M. Bauza, J. Wu, N. Fazeli, J. Tenenbaum, A. Rodriguez ICRA, 2019 PDF / website We propose a hybrid dynamics model, simulator-augmented interaction networks (SAIN), combining a physics engine with an object-based neural network for dynamics modeling.

A. Ajay, J. Wu, N. Fazeli, M. Bauza, L. Kaelbling, J. Tenenbaum, A. Rodriguez IROS, 2018 (Best Paper Award on Cognitive Robotics) PDF / website We augment an analytical rigid-body simulator with a neural network that learns to model uncertainty as residuals.

A. Zeng, S. Song, K. Yu, E. Donlon, F. Hogan, M. Bauza, et al. ICRA, 2018 (Best Systems Paper Award by Amazon Robotics) PDF / video / website With the MIT-Princeton team, we developed a robust robotic system for bin picking.

|

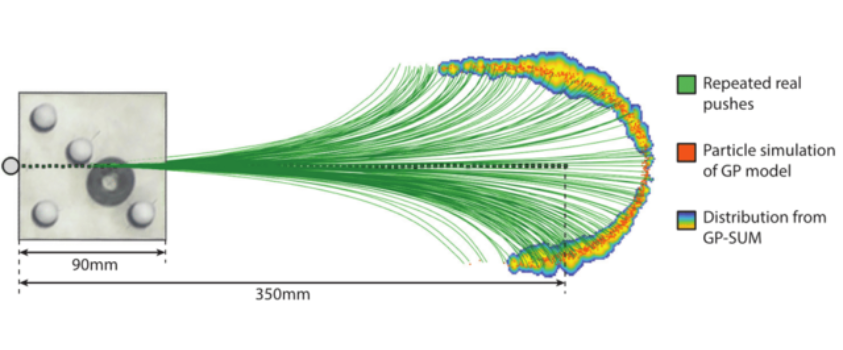

M. Bauza, A. Rodriguez WAFR, 2018 We developed the algorithm GP-SUM: a GP-Bayes filter that propagates in time non-Gaussian beliefs. |

M. Bauza, A. Rodriguez ICRA, 2017 Characterizing the uncertainty of different pushes allows better action selection.

K. Yu, M. Bauza, N. Fazeli, A. Rodriguez IROS, 2016 (Best Paper Finalist at IROS) PDF / video / website More than a million data points collected from real pushing experiments.

- July 2022 Invited talk at the RSS 2022 workshop on The Science of Bumping Into Things.
- May 2022 Co-organized the workshop at ICRA 2022 on Bi-manual Manipulation: Addressing Real-world Challenges.
- March 2022 Invited talks at EPFL, Princeton University, CMU, and the University of Pennsylvania.
- February 2022 Invited talks at Columbia University, the Autonomy Talks at ETH Zurich, and Cornell Tech.
- December 2021 Invited talk at the Washington University robotics colloquium.
- November 2021 Invited talks at Stanford and the CMU Manipulation discussion group.
- October 2021 Invited talk at the Cornell Robotics Seminar and selected to attend Rising Stars in EECS.
- July 2021 Attended the 2021 RSS Pioneers Workshop.
- May 2021 Finalist for the ICRA 2021 Best Paper Award in Service Robotics.
- March 2021 Invited talk at the University of Toronto AI in Robotics Seminar.
- October 2020 Invited talk at the University of Pennsylvania GRASP Seminar.
- May 2020 Co-organized the workshop at ICRA 2020 on Uncertainty in Contact-Rich Interactions.
- November 2019 Selected to attend the Global Young Scientists Summit, awarded to only 5 PhD students from all MIT departments.
- October 2019 Rising Stars in Mechanical Engineering, awarded to 30 graduate and postdoctoral women.
- January 2019 Awarded the Facebook Emerging Scholar Award (21 awardees out of more than 900 applications).
- December 2018 Awarded the NVIDIA Graduate Fellowship (given to 10 PhD students out of more than 230 applications).
